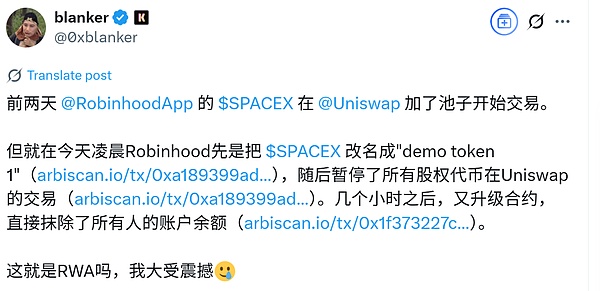

I read a post on X claiming that Robinhood's stock tokenization project on Uniswap was allegedly a rug pull and could erase the token balances of holder addresses. I was skeptical of the balance-erasure claim, so I asked ChatGPT to look into it.

ChatGPT reached the same conclusion: balance erasure, as described, was unlikely.

What truly surprised me was how ChatGPT got there. I wanted to understand how it reached its conclusion, so I examined its reasoning chain.

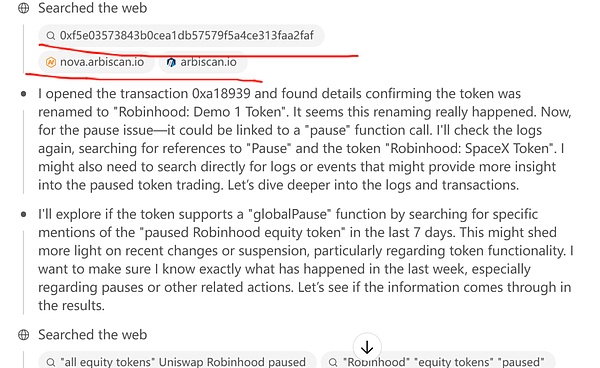

I noticed that its reasoning chain included several steps in which it "input" an Ethereum address into a block explorer and then checked the address's transaction history.

Please pay special attention to the word "input" in quotes. It is a verb, indicating that ChatGPT performed an operation on the block explorer. This surprised me, because it contradicted the security research I had done on ChatGPT six months earlier.

Six months ago, using ChatGPT o1 pro, I tried to research how early Ethereum gains were distributed. I explicitly asked o1 pro to query the genesis-block addresses and check how much of their balance remained unspent, but ChatGPT clearly told me it could not perform such an operation because of its security design.

ChatGPT can read pages, but it cannot perform UI operations such as clicking, scrolling, or typing: the actions a human performs on a webpage's interface, such as logging into Taobao.com or searching for a specific item. ChatGPT is explicitly prohibited from simulating UI events.

That was the conclusion of my research six months ago.

Why did I research this? Because at the time, Anthropic had announced an experimental feature, "Computer use (beta)", for Claude 3.5 Sonnet: an agent that could take over a user's computer. Claude could read the screen, move the cursor, click buttons, and type text like a human, completing web searches, filling in forms, and placing orders.

This is quite scary. I imagined Claude suddenly going rogue, opening my note-taking software, and reading all my work and life logs, potentially finding private keys I might have recorded in plain text for convenience.

After that research, I bought a brand-new computer just for running AI software and stopped running AI software on my crypto computer. As a result, I now have an extra Windows computer and an Android phone, which is frustrating: a pile of computers and phones.

Phones in China already have similar permissions. Yu Chengdong recently made a video promoting Huawei's Xiao Yi assistant, which can book flights and hotels for users on their phones. A few months ago, Honor phones even let users complete an entire Meituan order with AI commands.

If such an AI can help you order on Meituan, can it also read your WeChat chat records?

This is quite frightening.

Because phones are end-user devices and assistants like Xiao Yi run on small on-device (edge) models, we can still manage AI permissions, for example by forbidding the AI from accessing the photo album. We can also lock specific apps, such as putting a notes app behind a password, which prevents Xiao Yi from accessing it directly.

Cloud-based large models like ChatGPT and Claude are a different matter. If they gain permission to simulate UI clicks, swipes, and inputs, that would be serious trouble, because ChatGPT is constantly communicating with cloud servers: everything it reads on your screen is uploaded to the cloud. This is completely different from edge-side models like Xiao Yi, which only read information locally.

An edge-side model like Xiao Yi is like handing your phone to a computer expert sitting next to you so they can operate apps for you. The expert cannot copy your phone's data and take it home, and you can take your phone back at any time. After all, asking someone to help fix your computer is common, right?

Cloud-based LLMs like ChatGPT, by contrast, amount to remote control of our phones and computers, as if someone had taken over your devices from afar. Just imagine the risk: they could do anything on your phone or computer without your knowledge.

When I saw ChatGPT's reasoning chain simulate an "input" action on the block explorer Arbiscan (arbiscan.io), I was shocked, and I immediately asked ChatGPT how it had done it. If ChatGPT wasn't lying to me, I had worried for nothing this time: it had not obtained permission to simulate UI operations.

It accessed Arbiscan and "input" an address to view its transaction records purely through a clever trick. I can't help but marvel at how impressive ChatGPT o3 is.

ChatGPT o3 had figured out how Arbiscan builds the URLs of the pages you reach after searching for a transaction or address. Transaction pages follow the pattern https://arbiscan.io/tx/ followed by the transaction hash, and address or contract pages follow https://arbiscan.io/address/ followed by the address. Knowing this pattern, o3 simply appended a contract address to arbiscan.io/address/, opened the page, and read the information directly.
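The trick is easy to reproduce. Below is a minimal Python sketch, assuming only the two URL patterns described above (/address/ and /tx/ under https://arbiscan.io). The helper names `address_url` and `tx_url` and the hex-format checks are hypothetical, added for illustration; they are not part of Arbiscan or ChatGPT.

```python
import re

# Explorer base URL mentioned in the text (Arbiscan, for Arbitrum).
ARBISCAN_BASE = "https://arbiscan.io"

# Hypothetical sanity checks: an EVM address is "0x" + 40 hex characters,
# a transaction hash is "0x" + 64 hex characters.
_ADDRESS_RE = re.compile(r"0x[0-9a-fA-F]{40}")
_TXHASH_RE = re.compile(r"0x[0-9a-fA-F]{64}")


def address_url(address: str) -> str:
    """Build the address/contract page URL by appending to /address/."""
    if not _ADDRESS_RE.fullmatch(address):
        raise ValueError(f"not a 20-byte hex address: {address!r}")
    return f"{ARBISCAN_BASE}/address/{address}"


def tx_url(tx_hash: str) -> str:
    """Build the transaction page URL by appending to /tx/."""
    if not _TXHASH_RE.fullmatch(tx_hash):
        raise ValueError(f"not a 32-byte hex transaction hash: {tx_hash!r}")
    return f"{ARBISCAN_BASE}/tx/{tx_hash}"


if __name__ == "__main__":
    # Placeholder values for illustration only, not real contracts or transactions.
    print(address_url("0x" + "ab" * 20))
    print(tx_url("0x" + "12" * 32))
```

Opening one of these URLs is just an ordinary page load, so it should show the same page a human reaches by pasting the address into the search box and pressing Enter, without any simulated click or keystroke.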

Wow.

It is as if, to check a transaction on a block explorer, we didn't type the txhash into the search box and press Enter, but instead constructed the URL of the page we wanted and typed it straight into the browser's address bar.

Isn't that awesome?

So, ChatGPT did not break the restriction on simulating UI operations.

However, if we truly care about computer and phone security, we must be careful about the permissions that large language models (LLMs) have on our devices.

On devices with high security requirements, we should disable AI assistants altogether.

Note especially that where the model runs (on the device or in the cloud) determines the security boundary more than how intelligent the model is. This is the fundamental reason why I would rather set up an isolated device than let a cloud-based large model run on the computer that holds my private keys.